Photogrammetry for games

Over the last decade, character models and environments in video games have come on in leaps and bounds, thanks not only to upgraded console hardware but also to the adoption of digitization processes like photogrammetry. The technology makes it possible to create photorealistic worlds that offer an unprecedented level of immersion to a growing army of gamers around the globe. Those familiar with photogrammetry will know that it works by collating multiple images of a target object and transforming them into 3D models with impressively high-quality textures. But how exactly is this process being used in the gaming industry? In the following article, we lift the lid on the topic, covering everything from workflow steps to the prospect of using 3D scanning as an alternative.

Introduction

A video game being played on a smartphone

Before we get into applications, you may well be asking: what is photogrammetry?

In short, the process involves taking overlapping photographs of a target object from several angles. By identifying the same points across these photographs and accounting for each camera’s position, focal length, and lens distortion, you can triangulate where those points lie in 3D space and reconstruct the object as a 3D model.
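
To make the triangulation step concrete, here is a minimal Python sketch of linear (DLT) triangulation using NumPy. It assumes the two cameras are already calibrated, meaning their 3x4 projection matrices are known, and that the matching pixel coordinates have already been found; the camera values in the example are made up for illustration. Real photogrammetry software estimates camera poses and many thousands of points jointly (typically with bundle adjustment), so treat this as a sketch of the core geometry only.

```python
import numpy as np

def project(P, X):
    """Project a 3D point X through a 3x4 camera matrix P to pixel coordinates."""
    x = P @ np.append(X, 1.0)
    return x[:2] / x[2]

def triangulate_point(P1, P2, x1, x2):
    """Linear (DLT) triangulation of one point seen in two calibrated views.

    P1, P2: 3x4 projection matrices (intrinsics @ [R | t]).
    x1, x2: pixel coordinates (u, v) of the same feature in each image.
    Returns the 3D point in world coordinates.
    """
    # Each view gives two linear equations in the homogeneous point X:
    # u * (P[2] . X) - (P[0] . X) = 0 and v * (P[2] . X) - (P[1] . X) = 0.
    A = np.vstack([
        x1[0] * P1[2] - P1[0],
        x1[1] * P1[2] - P1[1],
        x2[0] * P2[2] - P2[0],
        x2[1] * P2[2] - P2[1],
    ])
    # Least-squares solution: right singular vector with the smallest singular value.
    _, _, Vt = np.linalg.svd(A)
    X = Vt[-1]
    return X[:3] / X[3]

if __name__ == "__main__":
    # Hypothetical camera: 1000 px focal length, principal point at (640, 360).
    K = np.array([[1000.0, 0.0, 640.0], [0.0, 1000.0, 360.0], [0.0, 0.0, 1.0]])
    P1 = K @ np.hstack([np.eye(3), np.zeros((3, 1))])
    # Second camera shifted 0.5 units along x (t = -camera_center).
    P2 = K @ np.hstack([np.eye(3), np.array([[-0.5], [0.0], [0.0]])])
    X_true = np.array([0.2, -0.1, 4.0])
    X_est = triangulate_point(P1, P2, project(P1, X_true), project(P2, X_true))
    print(X_est)  # approximately [0.2, -0.1, 4.0]
```

With noise-free inputs the recovered point matches the original; with real, noisy matches, more views and a non-linear refinement step improve the estimate.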

In the world of video gaming, photogrammetry is increasingly being used to capture real-life props, locations, and even actors’ faces and bring them into games. With the technology, developers can not only build worlds with photorealistic graphics but also do so in a fraction of the time it would take to create them manually.

Before turning to photogrammetry, graphics artists often had to build such 3D assets from scratch, in a workflow that forced them to compromise, balancing aesthetics against gameplay and lead-time restrictions.

Traditional ‘white box’ design methods, in which code had to be continually tested as the game evolved around it, also created uncertainty, as systems couldn’t be fully assessed until the project started to come together. By contrast, with photogrammetry, studios can capture and upload models to create world ‘kits’ ahead of time. This helps developers be better prepared and deliver on schedule.

Over the remainder of this article, we will break down the individual workflow steps some developers are undertaking to make this possible. We’ll also delve into the different technologies it’s possible to deploy as a means of carrying these out, and assess the potential advantages of each approach.

How is photogrammetry used in video games?

With high graphical fidelity and short launch cycles key to success in the modern gaming industry, the benefits of adopting photogrammetry are clear. As such, the technology is quickly becoming an industry standard. But how does the whole process work in practice?

As with any other photogrammetry application, the workflow starts with the object being photographed from various angles in a linear, matrix-like pattern, with each photograph overlapping the next. When capturing larger objects, developers can choose to start by taking 360° wide shots before moving in close to capture fine details. For such large subjects, however, long-range capture devices are generally easier to use, as they’re better suited to this kind of application.
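
The overlap requirement can be turned into simple capture-planning arithmetic. The sketch below assumes a pinhole camera moving in a straight line parallel to the surface; the function names, the 90° field of view, and the 60% overlap in the example are illustrative assumptions, not recommended settings.

```python
import math

def footprint_m(distance_m: float, fov_deg: float) -> float:
    """Width of surface visible in one frame for a pinhole camera.

    fov_deg is the field of view along the direction the camera moves.
    """
    return 2.0 * distance_m * math.tan(math.radians(fov_deg) / 2.0)

def shot_spacing_m(distance_m: float, fov_deg: float, overlap: float) -> float:
    """How far to move between shots so consecutive frames share `overlap` (0..1)."""
    if not 0.0 <= overlap < 1.0:
        raise ValueError("overlap must be in [0, 1)")
    return footprint_m(distance_m, fov_deg) * (1.0 - overlap)

def shots_needed(length_m: float, distance_m: float, fov_deg: float, overlap: float) -> int:
    """Number of frames needed to cover a strip of the given length."""
    frame = footprint_m(distance_m, fov_deg)
    if length_m <= frame:
        return 1
    # The first frame covers one footprint; each further frame adds one spacing of new coverage.
    return 1 + math.ceil((length_m - frame) / shot_spacing_m(distance_m, fov_deg, overlap))

if __name__ == "__main__":
    # A 10 m wall shot from 2 m away with a 90-degree lens and 60% overlap.
    print(shot_spacing_m(2.0, 90.0, 0.6))       # about 1.6 m between shots
    print(shots_needed(10.0, 2.0, 90.0, 0.6))   # 5
```

Raising the overlap shrinks the spacing, so the shot count grows quickly; that trade-off is one reason capturing large objects photo by photo is time-consuming.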

Photogrammetry being used in the video game industry. Image source: smns-games.com

That said, achieving all this is easier said than done, and game studios have to avoid a number of pitfalls to ensure they get complete models with the right shape and proportions. For a start, developers need to consider the equipment they plan to use. If they’re using a high-resolution camera, they have to make sure that the photos overlap.

If they’re using a Light Detection and Ranging (LiDAR) device, they’ll also have to consider the object’s surface material. If it happens to be retro-reflective, for example, it’s likely to bounce light back to the scanner’s receiver with minimal signal loss. On the flip side, if the object is shiny or transparent, it’s going to be trickier to digitize.
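
For context, many LiDAR devices are pulsed time-of-flight systems: they measure how long a light pulse takes to return and convert that time into distance, which is why a surface that sends little light back to the receiver is harder to capture. A minimal sketch of that conversion, assuming a pulsed time-of-flight device (phase-shift scanners measure distance differently):

```python
# Speed of light in a vacuum, in meters per second.
SPEED_OF_LIGHT_M_S = 299_792_458.0

def tof_range_m(round_trip_s: float) -> float:
    """Distance to a surface from a light pulse's round-trip time.

    The pulse travels out and back, so the one-way distance is half the path.
    """
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0

if __name__ == "__main__":
    # A return after roughly 66.7 nanoseconds corresponds to a surface about 10 m away.
    print(f"{tof_range_m(66.7e-9):.2f} m")
```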

Key point

Having long been used in surveying to build topographic maps, photogrammetry is now proving a useful tool for video game developers creating 3D environments.

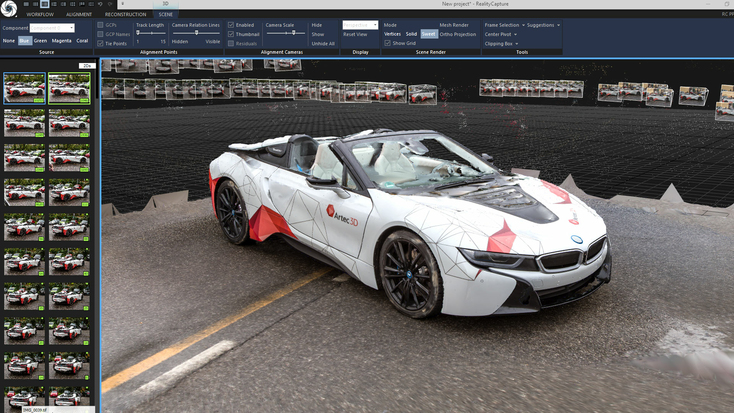

Once developers have tackled these issues and captured a set of sufficiently overlapping photographs, they need to process them, often beginning in a program like Adobe Photoshop. This allows aspects such as lighting and shadows to be adjusted so that the final models match the tone of the game they’re designed to furnish. After color correction, the images are often imported into programs like RealityCapture, where they can be aligned, turned into a point cloud, and transformed into a textured 3D mesh.
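
Photoshop corrections are made interactively, but the underlying idea can be sketched in code. Below is a gray-world white balance, a standard and deliberately simple color-correction technique that scales each RGB channel so the image’s average color becomes neutral. It’s our illustration, not the method any particular studio is confirmed to use; in practice a correction like this would typically be applied consistently across every photo in a set so the resulting textures match.

```python
import numpy as np

def gray_world_balance(image: np.ndarray) -> np.ndarray:
    """Scale each RGB channel so the image's average color becomes neutral gray.

    image: float array of shape (H, W, 3) with values in [0, 1].
    """
    channel_means = image.reshape(-1, 3).mean(axis=0)
    gray = channel_means.mean()
    # Per-channel gains; the floor avoids division by zero on an empty channel.
    gains = gray / np.maximum(channel_means, 1e-8)
    return np.clip(image * gains, 0.0, 1.0)

if __name__ == "__main__":
    # A hypothetical photo with a warm (reddish) color cast.
    photo = np.ones((2, 2, 3)) * np.array([0.6, 0.4, 0.2])
    print(gray_world_balance(photo)[0, 0])  # roughly [0.4, 0.4, 0.4]
```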

Initial models can then be ‘retopologized,’ a process in which a model’s mesh is rebuilt with a lower polygon count so that it’s easier to render in-game. Finally, once the detail stored in the high-resolution 3D meshes is baked into texture files, the models’ surfaces can be tweaked in a number of ways to make them appear more realistic.
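
Retopology is usually done by artists or with dedicated tools, but the goal of cutting the polygon count can be illustrated with vertex-clustering decimation, a simple, well-known reduction technique. The sketch below (NumPy; the function name and grid-cell approach are our illustrative choices) snaps vertices to a 3D grid, merges each cell’s vertices into one, and drops triangles that collapse. Unlike artist-driven retopology, it does not produce clean, animation-friendly edge flow.

```python
import numpy as np

def decimate_by_clustering(vertices: np.ndarray, faces: np.ndarray, cell_size: float):
    """Reduce polygon count by merging all vertices that fall in the same grid cell.

    vertices: (N, 3) float array; faces: (M, 3) int array of vertex indices.
    Returns new (vertices, faces) with collapsed and duplicate triangles removed.
    """
    cells = np.floor(vertices / cell_size).astype(np.int64)
    _, cluster_of = np.unique(cells, axis=0, return_inverse=True)
    cluster_of = cluster_of.reshape(-1)  # some NumPy versions return a 2D inverse here
    n_clusters = cluster_of.max() + 1
    # One representative vertex per occupied cell: the mean of its members.
    new_vertices = np.zeros((n_clusters, 3))
    np.add.at(new_vertices, cluster_of, vertices)
    new_vertices /= np.bincount(cluster_of, minlength=n_clusters)[:, None]
    # Remap faces and drop triangles whose corners merged into fewer than 3 clusters.
    new_faces = cluster_of[faces]
    keep = ((new_faces[:, 0] != new_faces[:, 1]) &
            (new_faces[:, 1] != new_faces[:, 2]) &
            (new_faces[:, 0] != new_faces[:, 2]))
    new_faces = new_faces[keep]
    # Remove duplicate triangles while preserving each survivor's winding order.
    _, first = np.unique(np.sort(new_faces, axis=1), axis=0, return_index=True)
    return new_vertices, new_faces[np.sort(first)]
```

A larger `cell_size` merges more vertices and produces a coarser mesh, which is the basic speed-versus-detail trade-off behind any polygon reduction.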

You can combine them with ‘masks’ that add visual effects such as shadows and wear, and even use procedural or texture maps to better simulate surface geometry, before exporting them into popular game engines such as Unreal Engine.
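
One common way texture maps ‘simulate’ geometry is a normal map, which stores surface orientation per pixel so that lighting reacts to detail the low-poly mesh doesn’t have. As an illustration, here is a sketch that derives a tangent-space normal map from a grayscale height map with NumPy. The `strength` parameter is a hypothetical knob, and the sign of the green (y) channel is an assumption, since conventions differ between tools and engines.

```python
import numpy as np

def height_to_normal_map(height: np.ndarray, strength: float = 1.0) -> np.ndarray:
    """Convert a grayscale height map (H, W) into a tangent-space normal map (H, W, 3).

    Output is encoded to [0, 1] as is conventional for RGB normal maps,
    so (0.5, 0.5, 1.0) represents a flat surface facing straight out.
    """
    # np.gradient returns slopes along axis 0 (rows, y) then axis 1 (columns, x).
    dh_dy, dh_dx = np.gradient(height.astype(float))
    normals = np.dstack([-strength * dh_dx,
                         -strength * dh_dy,
                         np.ones_like(height, dtype=float)])
    normals /= np.linalg.norm(normals, axis=2, keepdims=True)
    return normals * 0.5 + 0.5

if __name__ == "__main__":
    # A hypothetical height map that rises steadily from left to right.
    ramp = np.tile(np.arange(5.0), (5, 1))
    print(height_to_normal_map(ramp)[2, 2])  # normal tilted away from +x
```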

Examples of photogrammetry in video games

Photogrammetry itself is an evolving but not entirely new technology. The process has long been deployed in areas such as quality control and surveying, but it has only gained traction in gaming fairly recently. One of the first major studios to truly embrace photogrammetry was DICE, a Swedish developer charged with rebooting the hugely popular Star Wars: Battlefront franchise in 2015.

Facing a tight launch schedule, a demand for 60 FPS gameplay, and the need to get to grips with a new console generation, DICE was not short of challenges heading into production. However, the studio still wanted to create a world that was faithful to its source material. To achieve this, the developer delved into the archives to fish out props from the original Star Wars movies, before 3D modeling them with photogrammetry.

DICE Star Wars Battlefront video game characters. Image source: battlefront.fandom.com

Using this approach, DICE was able to more than halve the time it took to digitize each asset compared with its previous title, Battlefield 4. What’s more, the studio’s work allowed it to develop ‘level construction kits’ made up of Star Wars props, character models, and scenery, which formed the basis of the final game. DICE also found that the approach let it establish a workflow well ahead of launch, helping it get ahead of internal deadlines.

Key point

Photogrammetry is not just used to build sweeping 3D environments, but many of their component parts, including props, foliage, and character models.

Since DICE’s pioneering work first came to light, other blockbuster video games have been built in a similar way. For its 2019 relaunch of the Call of Duty: Modern Warfare franchise, Infinity Ward discovered that it could use data gathered via photogrammetry in a ‘tiling’ process that improved the resolution of surface detail.

As development of the title continued, the studio experimented further. In some cases, team members were scanned as a basis for corpse models, and the team even used drone-flown scanners to capture larger environments. With photogrammetry ultimately enabling the rapid creation of prop, scenery, weapon, vehicle, and character models, the project showed just how far the technology’s video game applications have come.

Photogrammetry software for video games

Earlier in this article we mentioned RealityCapture, but it’s not the only photogrammetry software that can create accurate 3D models for video games. Industry-leading game developer Electronic Arts (EA), for instance, is known to use PhotoModeler. The software’s Idealize functionality removes lens distortion from images during processing, which is said to make it far easier to create crisp game and animation backgrounds that better match their source material.
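
PhotoModeler’s Idealize feature is proprietary, but what ‘removing lens distortion’ means in general can be sketched. The code below assumes the widely used Brown-Conrady radial model with two coefficients (`k1`, `k2`) and known intrinsics (`fx`, `fy`, `cx`, `cy`); real lenses may also need tangential or higher-order terms. Because the radial distortion formula has no simple closed-form inverse, the undistortion step uses fixed-point iteration.

```python
def distort(x: float, y: float, k1: float, k2: float) -> tuple:
    """Apply radial distortion to normalized image coordinates (x, y)."""
    r2 = x * x + y * y
    factor = 1.0 + k1 * r2 + k2 * r2 * r2
    return x * factor, y * factor

def undistort(xd: float, yd: float, k1: float, k2: float, iterations: int = 20) -> tuple:
    """Invert radial distortion by fixed-point iteration.

    Coordinates are normalized: ((u - cx) / fx, (v - cy) / fy).
    Converges quickly for the moderate distortion typical of photo lenses.
    """
    x, y = xd, yd
    for _ in range(iterations):
        r2 = x * x + y * y
        factor = 1.0 + k1 * r2 + k2 * r2 * r2
        x, y = xd / factor, yd / factor
    return x, y

def undistort_pixel(u, v, fx, fy, cx, cy, k1, k2) -> tuple:
    """Map a distorted pixel to where an ideal (distortion-free) lens would place it."""
    x, y = undistort((u - cx) / fx, (v - cy) / fy, k1, k2)
    return x * fx + cx, y * fy + cy

if __name__ == "__main__":
    # Hypothetical barrel distortion (negative k1) on a 1280x720 image.
    print(undistort_pixel(1200.0, 650.0, 1000.0, 1000.0, 640.0, 360.0, -0.1, 0.01))
```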

The program also features an automatic point cloud-generation algorithm that yields models with highly accurate textures, which can be exported in widely used CAD formats. That said, the program is not built strictly for photogrammetry, and as a result it lacks the automated photo-to-solid workflow of dedicated competitors.
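
The article doesn’t specify which file formats the software exports. As an illustration of what mesh export involves, here is a sketch that writes a triangle mesh as Wavefront OBJ, a plain-text mesh format that most 3D tools and game engines can import. OBJ is our choice for the example, not a statement about PhotoModeler’s export options, and `to_obj` is a hypothetical helper.

```python
def to_obj(vertices, faces) -> str:
    """Serialize a triangle mesh as Wavefront OBJ text.

    vertices: sequence of (x, y, z); faces: sequence of 0-based vertex-index triples.
    OBJ indices are 1-based, so each index is shifted by one.
    """
    lines = [f"v {x:.6f} {y:.6f} {z:.6f}" for x, y, z in vertices]
    lines += [f"f {a + 1} {b + 1} {c + 1}" for a, b, c in faces]
    return "\n".join(lines) + "\n"

if __name__ == "__main__":
    # A single triangle, written to a file a game engine or 3D editor could open.
    triangle = to_obj([(0, 0, 0), (1, 0, 0), (0, 1, 0)], [(0, 1, 2)])
    with open("triangle.obj", "w", encoding="utf-8") as f:
        f.write(triangle)
    print(triangle)
```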

Car photogrammetry in RealityCapture

For those seeking programs designed to recreate lush scenery, Unity markets SpeedTree. Using everything from 3D art tools to procedural generation algorithms, the software allows plant life to be recreated faithfully. It’s therefore no surprise that it has been picked up by gaming stalwarts like Bungie and Ubisoft.
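
SpeedTree’s internals aren’t covered here, but procedural plant generation in general can be illustrated with an L-system (Lindenmayer system), a classic technique that grows a plant by repeatedly rewriting a string of drawing commands and then interpreting it with a ‘turtle’. The rule, step length, and branching angle below are illustrative assumptions, not SpeedTree’s method.

```python
import math

def expand_lsystem(axiom: str, rules: dict, iterations: int) -> str:
    """Rewrite every symbol in parallel using `rules`; symbols without a rule are copied."""
    s = axiom
    for _ in range(iterations):
        s = "".join(rules.get(ch, ch) for ch in s)
    return s

def turtle_segments(commands: str, step: float = 1.0, angle_deg: float = 25.0):
    """Interpret commands as 2D line segments.

    F = draw forward, + / - = turn left / right, [ ] = start / end a branch.
    """
    x, y, heading = 0.0, 0.0, 90.0  # start at the origin, pointing up
    stack, segments = [], []
    for ch in commands:
        if ch == "F":
            nx = x + step * math.cos(math.radians(heading))
            ny = y + step * math.sin(math.radians(heading))
            segments.append(((x, y), (nx, ny)))
            x, y = nx, ny
        elif ch == "+":
            heading += angle_deg
        elif ch == "-":
            heading -= angle_deg
        elif ch == "[":
            stack.append((x, y, heading))
        elif ch == "]":
            x, y, heading = stack.pop()
    return segments

# A classic bracketed plant rule: every segment sprouts a left and a right branch.
PLANT_RULES = {"F": "F[+F]F[-F]F"}

if __name__ == "__main__":
    plant = expand_lsystem("F", PLANT_RULES, 3)
    print(len(turtle_segments(plant)), "branch segments")
```

Each iteration multiplies the segment count by five here, which shows how a tiny rule set can generate dense, varied foliage.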

Likewise, Agisoft offers Metashape, a photogrammetry package capable of processing complex image data captured both close up and at a distance. While it’s more often used in research, surveying, and defense than in video game development, the program’s auto-calibration feature and multi-camera support make it an attractive option for those digitizing complex objects or creating large 3D environments.

However, current photogrammetry packages have a few drawbacks. Many are sold in tiers, meaning that users may have to upgrade to access certain features. In other cases, programs lack some functionality altogether. This raises the barrier to entry for new adopters, as it places the onus on them to know which supplemental packages they’ll need for a given project.

Photogrammetry of a mancubus, a monster featured in the classic Doom video game series

Elsewhere, if you’re looking for a broader, more accessible package, Artec 3D markets Artec Studio. The program is most often used to process data captured by 3D scanners, and the latest version, Artec Studio 17, packs a number of useful features for optimizing the quality of the resulting 3D models.

Key point

Software is vital to 3D world-building, and program choice often determines the way captured images can be collated and presented in-game.

The program’s Autopilot functionality makes it easier than ever for newcomers by picking the most effective data processing algorithm for them, based on a few checkbox inputs. Artec Studio’s photo registration algorithm also allows users to improve model color clarity by texturing models with high-resolution photographs.

What’s more, with the platform’s alignment-by-scale algorithm, users can match the arbitrary scale of a 3D model to that of a target object, based on only a handful of key geometric features. This can be especially handy when transferring textures onto photogrammetry models made without scale references, which don’t correspond to real-world scale and often lack detailed surfaces.
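
The article doesn’t describe how Artec Studio’s alignment-by-scale works internally. As a generic illustration of recovering scale from a few matched features, here is Umeyama’s least-squares similarity transform, which estimates the scale, rotation, and translation that best map one set of corresponding 3D points onto another. It assumes at least three non-collinear point pairs have already been matched.

```python
import numpy as np

def similarity_transform(src, dst):
    """Least-squares scale s, rotation R, translation t such that dst ≈ s * R @ src + t.

    src, dst: (N, 3) arrays of corresponding feature points (N >= 3, not collinear).
    Implements Umeyama's closed-form solution via an SVD of the cross-covariance.
    """
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    mu_src, mu_dst = src.mean(axis=0), dst.mean(axis=0)
    src_c, dst_c = src - mu_src, dst - mu_dst
    cov = dst_c.T @ src_c / len(src)
    U, S, Vt = np.linalg.svd(cov)
    # Flip the last axis if needed so R is a proper rotation, not a reflection.
    D = np.ones(3)
    if np.linalg.det(U) * np.linalg.det(Vt) < 0:
        D[-1] = -1.0
    R = U @ np.diag(D) @ Vt
    var_src = (src_c ** 2).sum() / len(src)
    s = (S * D).sum() / var_src
    t = mu_dst - s * R @ mu_src
    return s, R, t
```

With the arbitrary-scale model’s feature points as `src` and the matching points on the reference object as `dst`, applying `s * R @ p + t` to every model vertex `p` brings the model to the reference scale.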

Overall, in tandem with Artec 3D hardware, these features streamline the process of capturing data and converting it into accurate models, whether for inspection and reverse engineering or for video game, animation, and CGI development.

3D scanning as an alternative

While photogrammetry has proven a capable video game modeling tool, it has its limitations. The accuracy of the resulting 3D assets depends on the resolution of the cameras used to photograph the target object. Changeable weather, along with calibration, angle, and redundancy issues, can all lead to models that lack detail. What’s more, capturing and combining overlapping images is inherently time-consuming. These issues raise the question: is there a more efficient 3D modeling method?
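
A rough way to see why camera resolution limits detail is the ground sample distance (GSD): the size of the surface patch that a single pixel covers. The sketch below uses the standard pinhole relation; the example camera (36 mm sensor width, 6000-pixel-wide images, 50 mm lens, 2 m from the subject) is an illustrative assumption.

```python
def ground_sample_distance_mm(sensor_width_mm: float, image_width_px: int,
                              focal_length_mm: float, distance_mm: float) -> float:
    """Width of the surface patch covered by one pixel (smaller means finer detail).

    Pinhole relation: GSD = (sensor width * distance) / (focal length * image width).
    """
    return sensor_width_mm * distance_mm / (focal_length_mm * image_width_px)

if __name__ == "__main__":
    print(ground_sample_distance_mm(36.0, 6000, 50.0, 2000.0))  # 0.24 mm per pixel
```

Halving the distance or doubling the pixel count halves the GSD, so finer surface detail requires closer shots or higher-resolution sensors, which in turn means more photos to cover the same object.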

World War 3: Late-stage character development following Artec Leo & Space Spider scanning, Glock 17 pistol scanned with Artec Space Spider, Face scans made with Artec Leo

Those seeking to speed up the creation of 3D models for video games might want to consider adopting 3D scanning. With scan speeds of up to 40 frames per second (in no-registration mode), cutting-edge devices like the Artec Leo offer photogrammetry users a potential way to accelerate their workflow.

The studio The Farm 51 has done just this. By pairing the Leo with another 3D scanner, the Artec Space Spider, the developer was able to create incredibly realistic models for its World War 3 video game. In fact, switching from the photogrammetry approach used on its previous titles allowed the firm to generate each character, vehicle, and weapon model for its online shooter in just a few hours.

World War 3 gameplay and an early-stage character development scan being reviewed with Artec Leo

Hellblade, the popular Xbox video game franchise, also features full-body 3D models created via Artec 3D scanning. Developed with the help of motion capture technology at Microsoft-owned studio Ninja Theory, the game features a jaw-dropping in-game replica of lead actor Melina Juergens. Thanks to the Artec Eva’s flexibility and accuracy, the developer was able not only to make the character appear lifelike but also to give her skin and muscles that move much like a real person’s.

Nowadays, scale is no obstacle to 3D modeling either. With the Artec Micro, you can create digital versions of tiny objects, capturing intricate details at a resolution of up to 0.029 mm, before using them to populate in-game worlds. At the other end of the spectrum, the long-range Artec Ray has already been used to 3D scan an entire rescue helicopter. With the technology continuing to find new large-scale modeling applications, who’s to say it can’t be used to bring such vehicles into the virtual realm?

A 3D model of an MD-902 and the helicopter being 3D scanned with Artec Leo

Not ready to do away with photogrammetry entirely just yet? No problem: in some cases, combining photogrammetry with 3D scanning is precisely the solution you’re looking for. This is made possible by Artec Studio 17 (AS17), which allows users to merge images captured with high-end cameras with 3D scans. Adopters can also take advantage of the software’s photo texture functionality, which enables models to be created with more accurate color data.

Conclusion

In light of the practical applications outlined above, it’s clear that both photogrammetry and 3D scanning are helping to push graphical boundaries in the gaming industry. Because each of these blockbusters relied on slightly different 3D platforms, there isn’t currently an all-purpose solution that can be rolled out to create models for any given video game.

Instead, studios are faced with a choice of hardware and software packages, with some designed specifically for game development, and others built for wider applications. When deciding between these, developers need to consider factors such as the type of environments they want to recreate in-game, as well as the graphical fidelity they want objects in their virtual world to have.

Fortunately for such creators, the rising adoption of photogrammetry and 3D scanning in gaming has led to the launch of products that meet a range of needs. The technologies’ proven ability to faithfully reproduce objects of all shapes and sizes, ready for uploading, tweaking, and integrating into virtual worlds, also makes them attractive for accelerating the development of new, cutting-edge games.

With this in mind, it’s an exciting time for photogrammetry and 3D scanning in the video game industry, and it will be interesting to see how the technologies continue to drive innovation in the near future.